Run OpenAI models free and offline with LM Studio. Step-by-step setup guide covering privacy, zero token costs, and hardware requirements in under 10 minutes.

With OpenAI's open-weight models now available for local use, you can run capable AI models without paying per token or sending sensitive data to the cloud. You keep control of your data and pay no usage fees.

This guide will walk you through setting up OpenAI's GPT-OSS models on your computer in under 10 minutes using LM Studio. This is great for:

- Privacy advocates — Run AI without sending data to third parties

- Cost-conscious businesses — Eliminate token costs and API fees entirely

- Developers — Iterate faster with instant local responses

- Remote workers — Access AI capabilities even without reliable internet

What are local AI models?

OpenAI's GPT-OSS models (available in 20B and 120B parameter versions) are open-weight large language models that you can download and run locally on your own hardware.

The "B" in these model names stands for billions of parameters, which are the learned values the model uses to make predictions. More parameters generally mean more capability but also require more computing resources:

- GPT-OSS-20B: A 20-billion parameter model suitable for most modern laptops and desktops with at least 16GB of RAM

- GPT-OSS-120B: A much larger 120-billion parameter model that requires specialized hardware or high-end workstations
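
As a rough illustration of why parameter count drives hardware needs, the sketch below estimates the memory needed just to hold a model's weights. The `bits_per_weight` values are assumptions chosen for illustration (about 4 bits for a heavily compressed model, 16 bits for half precision). Real model files can be larger, and running a model needs extra memory on top of the weights.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate memory (in GB) needed to hold the model weights alone."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


# Assumed precisions, for illustration only.
for name, params in [("GPT-OSS-20B", 20), ("GPT-OSS-120B", 120)]:
    for bits in (4, 16):
        print(f"{name} at {bits}-bit: ~{weight_memory_gb(params, bits):.0f} GB")
```

Under these assumptions, the 120B model needs about six times the memory of the 20B model at the same precision, which is why it is out of reach for typical laptops.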

Why local AI models matter for businesses

The shift toward local AI deployment represents more than just a technical evolution—it's a fundamental change in how businesses can leverage AI:

Data privacy and security

When you run AI locally, your data never leaves your device. This means:

- Sensitive customer information stays within your organization

- Intellectual property remains protected

- Compliance with regulations like GDPR and HIPAA becomes simpler

- No risk of data interception during transmission

Cost efficiency

Cloud-based AI services typically charge per token (unit of text processed) or API call, which can quickly add up:

- Local models eliminate recurring usage fees entirely

- No surprise bills from unexpected usage spikes

- Predictable, one-time costs for hardware
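
To make the trade-off concrete, here is a minimal break-even sketch. The hardware price and the per-token cloud price are hypothetical figures, not quotes from any provider, and the calculation ignores electricity and maintenance.

```python
def break_even_tokens(hardware_cost: float, price_per_million_tokens: float) -> float:
    """Tokens you would need to process before local hardware pays for itself."""
    if price_per_million_tokens <= 0:
        raise ValueError("price_per_million_tokens must be positive")
    return hardware_cost / price_per_million_tokens * 1_000_000


# Hypothetical figures: a $1,500 machine vs. a cloud price of $5 per million tokens.
tokens = break_even_tokens(1500, 5)
print(f"Break-even after about {tokens / 1e6:.0f} million tokens")
```

Swap in your own hardware quote and your current provider's pricing to see where the break-even point falls for your usage.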

Operational independence

Running AI locally gives you complete control over your AI infrastructure:

- No dependency on internet connectivity

- No service outages or API downtime

- Freedom from vendor lock-in

- Ability to customize and fine-tune models for specific needs

Speed and latency

Local models can offer significant performance advantages:

- No network latency for requests and responses

- Faster iteration for development and testing

- Consistent performance regardless of internet conditions

Hardware requirements: What you need to get started

To run local AI models effectively, you'll need adequate computing resources:

- Memory (RAM): Minimum 16GB, with 24GB or more recommended for smoother performance

- Storage: At least 15GB of free space for the model files

- Processor: Multi-core CPU (the more cores, the better)

- GPU: While not strictly required for basic usage, a dedicated GPU can significantly improve performance

Most modern business laptops and desktops can run the 20B model with acceptable performance, making it accessible to businesses of all sizes without requiring specialized hardware investments.
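
If you want to check a machine against these minimums before downloading anything, a small script along the following lines may help. It is a sketch using only Python's standard library. It assumes models will be stored on the drive that holds your home folder, and it cannot read total RAM on every platform (on Windows it skips the RAM check).

```python
import os
import shutil

MIN_RAM_GB = 16    # minimum RAM from the list above
MIN_DISK_GB = 15   # free space for the model files


def check_requirements(ram_gb, free_disk_gb):
    """Return a list of human-readable problems; an empty list means OK."""
    problems = []
    if ram_gb is not None and ram_gb < MIN_RAM_GB:
        problems.append(f"RAM: {ram_gb:.0f} GB found, {MIN_RAM_GB} GB needed")
    if free_disk_gb < MIN_DISK_GB:
        problems.append(f"Disk: {free_disk_gb:.0f} GB free, {MIN_DISK_GB} GB needed")
    return problems


def detect_ram_gb():
    """Total physical RAM in GB, or None where os.sysconf cannot report it."""
    try:
        return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES") / 1e9
    except (AttributeError, ValueError, OSError):
        return None


# Assumption: the model files will live on the same drive as the home folder.
free_gb = shutil.disk_usage(os.path.expanduser("~")).free / 1e9
issues = check_requirements(detect_ram_gb(), free_gb)
print("Ready for GPT-OSS-20B" if not issues else "\n".join(issues))
```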

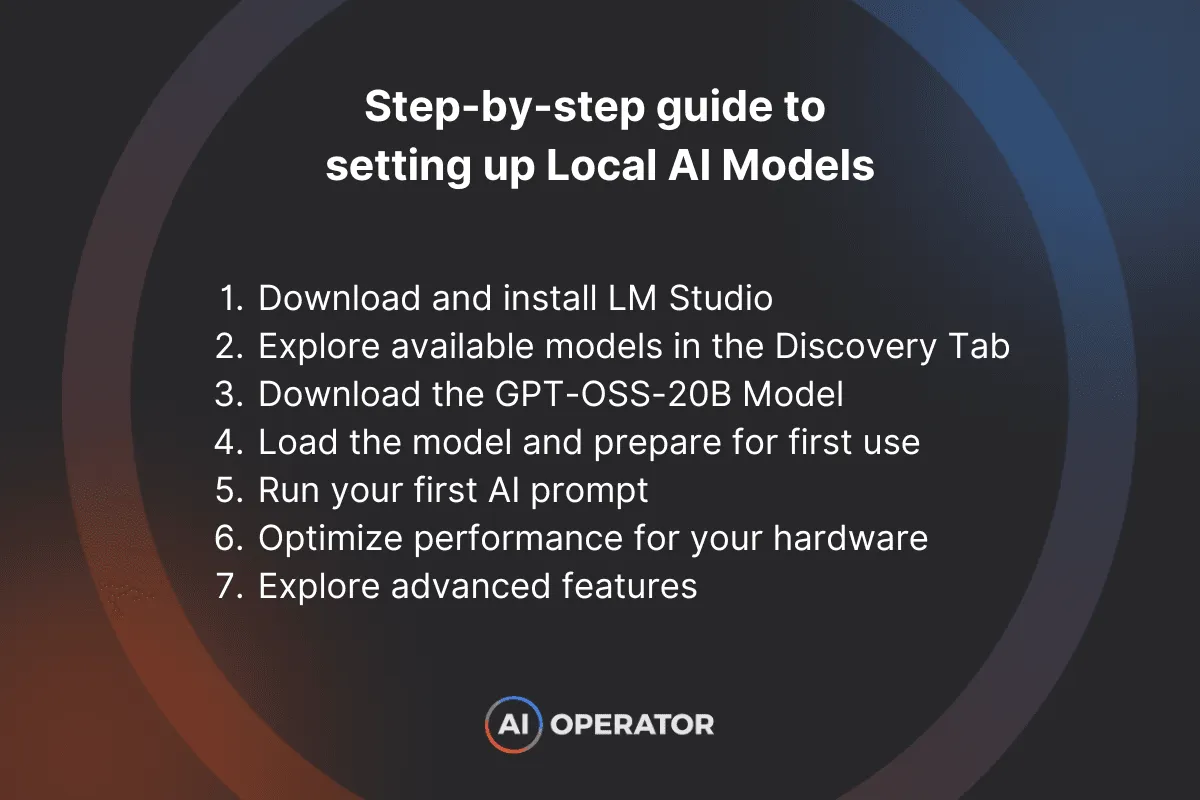

This section walks you through the entire setup process, from installation to running your first AI prompt—all in under 10 minutes.

Step 1: Download and install LM Studio

LM Studio is your gateway to running local AI models on your own computer. This free application serves as an interface for downloading, managing, and interacting with open-source language models.

- Visit the LM Studio website and download the application appropriate for your operating system

- Install the application following the standard installation process for your system

- Launch LM Studio to begin exploring available models

LM Studio provides a clean, intuitive interface that makes working with sophisticated AI models accessible even if you're not a technical expert.

Step 2: Explore available models in the Discover tab

Once LM Studio is open, navigate to the "Discover" tab to browse all available open-source models.

The discovery section shows you all models that can run locally on your hardware, including:

- OpenAI's GPT-OSS models (20B and 120B versions)

- Google's Gemma models (available in several sizes)

- Other open-source models

For each model, you can view:

- Download size

- Hardware requirements

- Model description and capabilities

- Compatibility with your system

Step 3: Download the GPT-OSS-20B model

GPT-OSS-20B is ideal for most users as it runs well on standard hardware while providing impressive capabilities.

- In the Discover tab, search for "GPT-OSS-20B"

- Review the model details, noting the download size (approximately 12-13GB)

- Click the "Download" button to begin transferring the model to your computer

- Wait for the download to complete (time varies based on your internet connection)
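
For a sense of how long the download might take, the arithmetic can be sketched as follows. The connection speeds are example values, and real downloads are often slower than the advertised line speed.

```python
def download_minutes(size_gb: float, speed_mbps: float) -> float:
    """Minutes to download size_gb gigabytes at speed_mbps megabits per second."""
    megabits = size_gb * 8 * 1000  # 1 GB = 8,000 megabits (decimal units)
    return megabits / speed_mbps / 60


# 12.5 GB is the midpoint of the 12-13GB range; the speeds are example values.
for mbps in (50, 100, 500):
    print(f"{mbps} Mbps: ~{download_minutes(12.5, mbps):.0f} min")
```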

Hardware Requirements: Ensure your computer has at least 16GB of RAM (24GB recommended) and sufficient storage space (15GB minimum) for optimal performance.

The larger GPT-OSS-120B model is also available, but requires significantly more powerful hardware. Most standard laptops and desktops will struggle to run it effectively.

Step 4: Load the model and prepare for first use

After downloading, you'll need to load the model into active memory:

- From the main interface, click "Select Model to Load" at the top of the screen

- Choose "OpenAI GPT-OSS-20B" from your downloaded models list

- Wait for the model to load into memory (you'll see RAM usage increase)

- Monitor the status indicators at the bottom of the screen

Loading the model consumes significant system resources. You'll notice your CPU usage and RAM utilization increase substantially—this is normal and expected behavior.

Step 5: Run your first AI prompt

Now that your model is loaded, you can begin interacting with it:

- Type a prompt in the input field at the bottom of the chat interface

- Select your reasoning effort level (Low, Medium, or High) based on task complexity

- Click "Send" to process your prompt

- Watch as the model generates a response in real-time

For your first test, try something simple like "Write an empathetic five-bullet customer delay email" to verify everything is working correctly.

The response metrics show you exactly how your local model is performing. After generation completes, you'll see:

- Tokens per second (generation speed)

- Total tokens used

- Time to first token

- Stop reason (usually "EOS token found" when the model finishes on its own)
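
To show how these numbers relate to one another, here is a small sketch that derives generation speed from raw timings. How LM Studio computes its own figures is not stated here, so treat this as one plausible definition. The sample values are made up.

```python
def generation_stats(total_tokens: int, total_seconds: float, first_token_seconds: float) -> dict:
    """Derive tokens per second from raw timings.

    Speed is measured over the generation phase only, i.e. the time after
    the first token has appeared.
    """
    generation_seconds = total_seconds - first_token_seconds
    if generation_seconds <= 0:
        raise ValueError("total_seconds must exceed first_token_seconds")
    return {
        "tokens_per_second": total_tokens / generation_seconds,
        "time_to_first_token": first_token_seconds,
        "total_tokens": total_tokens,
    }


# Made-up example: 300 tokens, 15.5 s in total, first token after 0.5 s.
stats = generation_stats(total_tokens=300, total_seconds=15.5, first_token_seconds=0.5)
print(f"{stats['tokens_per_second']:.1f} tokens/s")
```

Comparing tokens per second before and after closing other applications is a simple way to see whether a change actually helped.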

Step 6: Optimize performance for your hardware

To get the best performance from your local model, you should close resource-intensive applications before running LM Studio. For example, web browsers, video editing software, and other RAM-heavy applications can significantly impact model performance.

Match the reasoning effort setting to the task:

- Set reasoning effort to "Low" for simple tasks or when speed is a priority

- Use "Medium" for balanced performance

- Reserve "High" for complex reasoning tasks where quality outweighs speed

If you experience slow responses:

- Ensure you're using the 20B model rather than 120B

- Check your system's available memory

- Restart LM Studio if performance degrades over time

- Consider formatting requests as bullet points for clearer outputs

Step 7: Explore advanced features

Once you're comfortable with basic operation, explore LM Studio's additional capabilities:

File attachments and RAG (Retrieval Augmented Generation) allow you to upload documents and have the model reference specific information from them.
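
The idea behind RAG can be sketched in a few lines: pick the document passages most relevant to the question, then place them in the prompt ahead of the question. The example below is a simplified illustration, not LM Studio's implementation. It ranks passages by shared words, whereas real systems usually rely on embeddings, and the sample passages are hypothetical.

```python
import re


def tokenize(text: str) -> set:
    """Lowercase a text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, chunks: list, top_k: int = 1) -> list:
    """Return the top_k chunks sharing the most words with the question."""
    q_words = tokenize(question)
    ranked = sorted(chunks, key=lambda c: len(q_words & tokenize(c)), reverse=True)
    return ranked[:top_k]


def build_prompt(question: str, chunks: list) -> str:
    """Place the retrieved text ahead of the question."""
    context = "\n".join(retrieve(question, chunks))
    return f"Use this context to answer.\n\nContext:\n{context}\n\nQuestion: {question}"


# Hypothetical document snippets.
docs = [
    "Refunds are processed within 14 days of the return being received.",
    "Our office is closed on public holidays.",
]
print(build_prompt("How long do refunds take?", docs))
```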

Other advanced features include:

- Continuing assistant messages

- Branching conversations to explore different response paths

- Regenerating specific messages

- Editing prompts to refine outputs

You can also experiment with different prompt formats to improve output quality:

- Request structured formats like tables or bullet points

- Ask for specific output formats (like JSON)

- Use clear, detailed instructions for better results
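
When you ask for JSON and plan to use the reply in a script, it helps to parse it defensively, because a model may wrap the JSON in a Markdown code fence. The helper below is a minimal sketch of that idea. The function name and the sample reply are hypothetical.

```python
import json
import re

FENCE = "`" * 3  # a Markdown code fence marker, built indirectly for readability


def parse_json_reply(reply: str):
    """Parse JSON from a model reply, tolerating a surrounding code fence."""
    text = reply.strip()
    fenced = re.match(rf"^{FENCE}(?:json)?\s*(.*?)\s*{FENCE}$", text, flags=re.DOTALL)
    if fenced:
        text = fenced.group(1)
    return json.loads(text)  # raises json.JSONDecodeError if still invalid


# Hypothetical reply in which the model wrapped its JSON in a fence.
reply = FENCE + 'json\n{"subject": "Delay update", "bullets": 5}\n' + FENCE
data = parse_json_reply(reply)
print(data["subject"])
```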

The power of local AI comes from both privacy and flexibility. With no token costs or usage limits, you can iterate rapidly and experiment freely with different approaches.
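
For developers who want to script that experimentation, LM Studio can also serve the loaded model through a local, OpenAI-style HTTP API. The sketch below assumes the server has been started and is listening on `http://localhost:1234/v1`, and that the model is exposed as `openai/gpt-oss-20b`. Both values are assumptions, so check them against your own setup.

```python
import json
import urllib.request

# Assumptions: LM Studio's local server is running at this address and the
# loaded model is exposed under this identifier. Adjust both as needed.
BASE_URL = "http://localhost:1234/v1"
MODEL_ID = "openai/gpt-oss-20b"


def build_chat_request(prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for the local server."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the prompt and return the text of the first choice."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=300) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the server running, `ask("Write an empathetic five-bullet customer delay email")` should return the model's reply as a string. The request never leaves your machine.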

Watch this video for a step-by-step visual guide on how to set everything up:

[YouTube: https://www.youtube.com/embed/Y7kAVwJ8ghc?iv_load_policy=3&rel=0&modestbranding=1&playsinline=1&autoplay=0&mute=1]

Comparing the 20B and 120B models

When deciding which model to use, consider these key differences:

GPT-OSS-20B is ideal for:

- Standard laptops and desktops (16GB+ RAM)

- Drafting content and general analysis

- Prototyping and everyday tasks

- Users who prioritize speed and efficiency

GPT-OSS-120B offers:

- Greater depth and consistency in responses

- Better handling of complex reasoning tasks

- More nuanced understanding of context

- But requires workstation-class hardware

For most business applications, the 20B model provides an excellent balance of capability and accessibility, running well on standard hardware while delivering high-quality results.
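
The decision can be summarized as a simple rule of thumb. In the sketch below, the 16 GB floor comes from this guide, while the 80 GB threshold for the larger model is an assumed stand-in for "workstation-class" hardware that you should adjust to your own setup.

```python
def recommend_model(ram_gb: float, large_model_min_gb: float = 80) -> str:
    """Suggest a GPT-OSS variant for a machine with ram_gb of memory.

    The 16 GB floor comes from this guide; large_model_min_gb is an assumed
    placeholder for "workstation-class" and should be adjusted.
    """
    if ram_gb >= large_model_min_gb:
        return "GPT-OSS-120B"
    if ram_gb >= 16:
        return "GPT-OSS-20B"
    return "neither (below the 16 GB minimum)"


for ram in (8, 16, 32, 128):
    print(f"{ram} GB -> {recommend_model(ram)}")
```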

By following these steps, you'll have a powerful AI assistant running completely on your own hardware—with no internet connection required, no data leaving your device, and absolutely zero token costs.

Running OpenAI's models locally changes how businesses can use artificial intelligence. You stay in control of your data, and usage-based fees no longer limit how much you can experiment.

Key takeaways from implementing local AI models:

- Complete data privacy — Your information never leaves your device, making this ideal for sensitive business operations

- Zero token costs — Eliminate recurring API fees entirely while maintaining enterprise-grade capabilities

- Full offline functionality — Access powerful AI tools even without internet connectivity

- Flexible deployment options — Choose between the 20B model for standard hardware or 120B for more demanding applications

- Rapid iteration — Experiment freely without worrying about usage limits or unexpected bills

Ready to experience the benefits of local AI? Download LM Studio today and book a call with us to kickstart your AI-learning journey!
